Communication-Efficient Distributed Training at Scale

The Challenge: Modern machine learning requires training on massive datasets across distributed systems, where communication costs often dominate computational costs and can limit scalability.

My Approach: I develop methods that integrate communication compression with error compensation mechanisms to mitigate communication bottlenecks while preserving convergence guarantees.

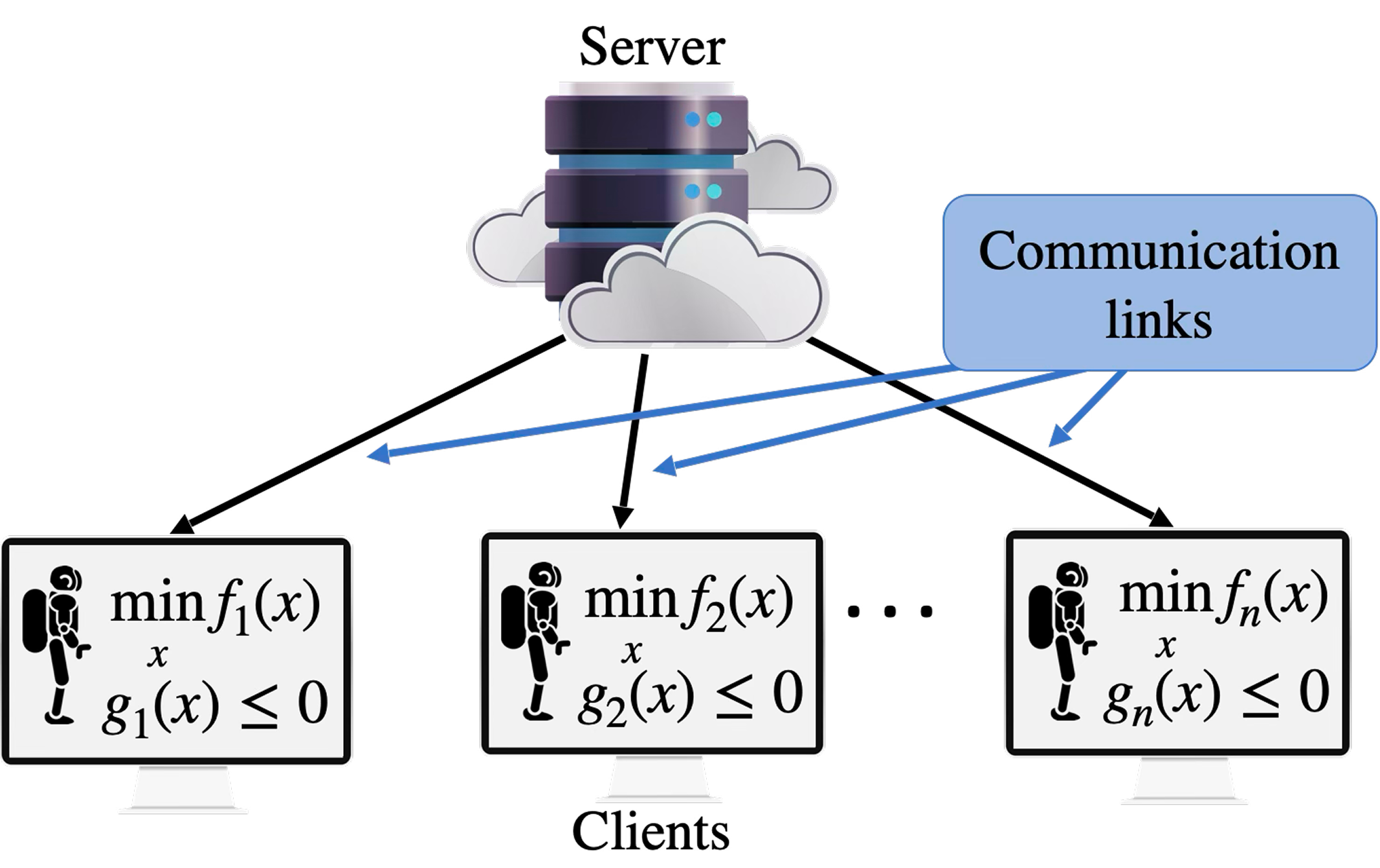

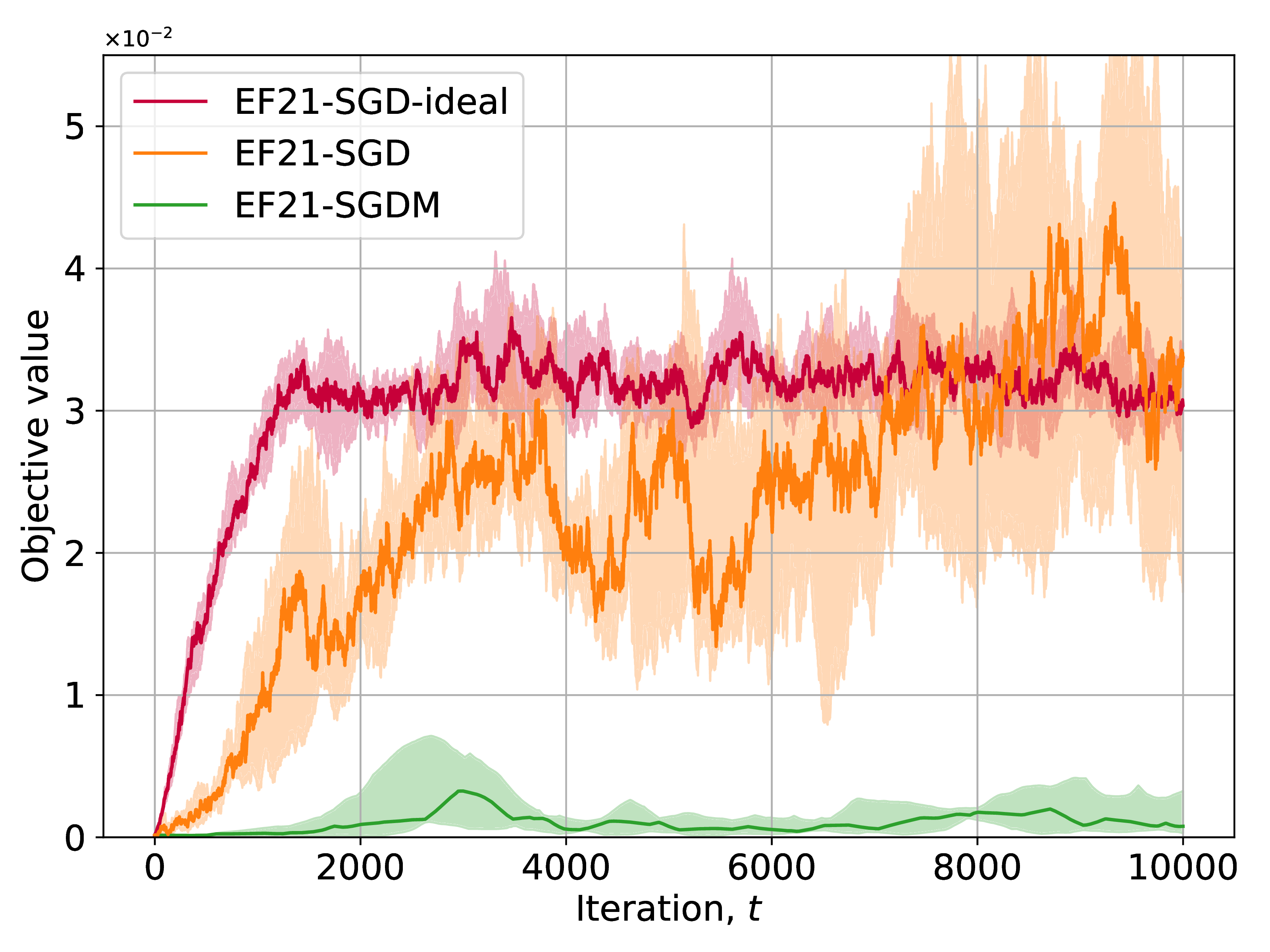

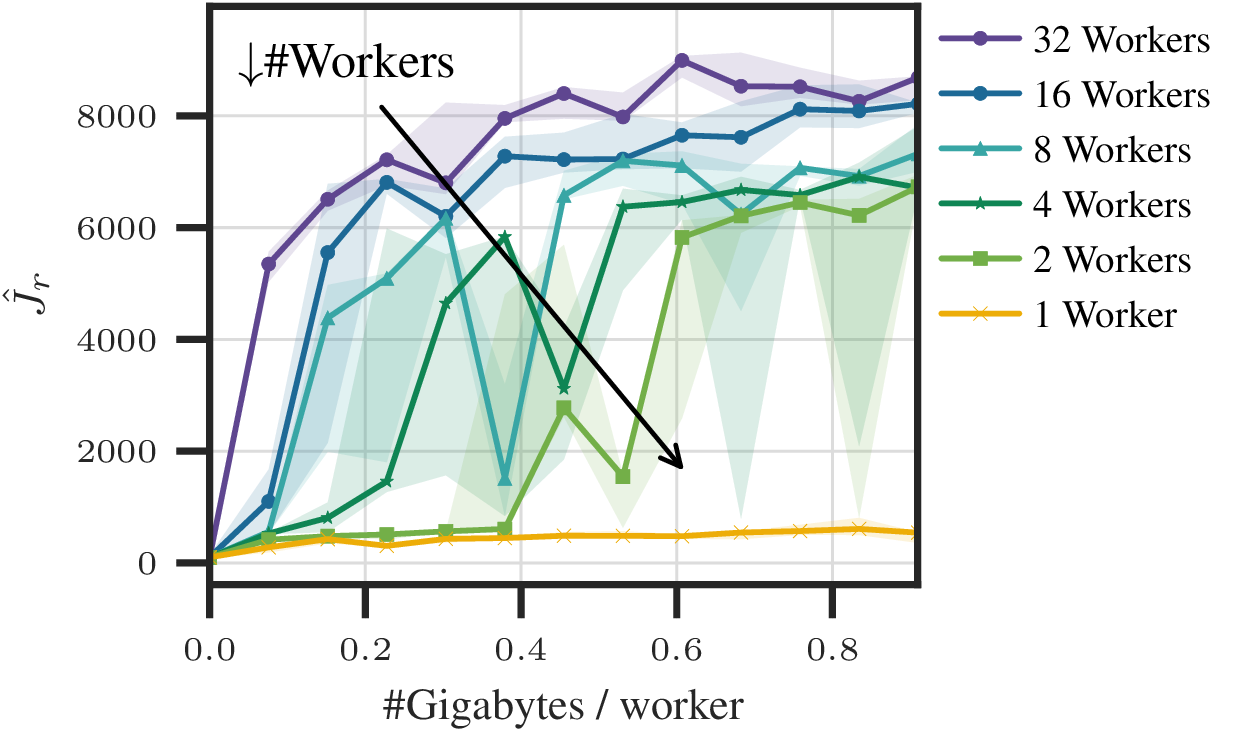

Empirical Insight: Figure 1 shows the distributed reinforcement learning deployments that motivate these systems. Figure 2 shows that EF21 can become unstable under stochastic gradients and that adding momentum restores stability. Figure 3 shows Safe-EF’s worker-scaling gains, which keep communication budgets in check.

Key Contributions:

- EF21: a simple, theoretically strong, and practically fast error-feedback method with optimal communication complexity (NeurIPS 2021, Oral).

- Six extensions and a comprehensive study of error feedback for modern systems (JMLR 2025).

- I identify the limitations of EF21 in stochastic settings (see Figure 2) and propose a theoretically grounded, practical momentum method. Momentum provably improves error feedback, bringing stability benefits and linear speed-ups (NeurIPS 2023).

- Safe-EF: communication-efficient methods for nonsmooth constrained optimization in safety-critical settings, validated on the challenging fleet-scale Humanoid training task with PPO under strict safety limits. We prove optimal communication complexity for such problems while delivering 100× communication savings in practice (ICML 2025).
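To make the error-feedback idea concrete, here is a minimal single-process sketch of an EF21-style update with a Top-k compressor. It is an illustration under stated assumptions, not the reference implementation: the names `top_k` and `ef21`, the toy quadratic objectives, and the step size are all hypothetical choices made for this example. Each worker keeps a local gradient estimate and transmits only the compressed difference between its current gradient and that estimate. The optional `eta` parameter sketches the momentum variant (each worker compresses against a running average of its gradients); `eta=1.0` recovers the plain update.

```python
import numpy as np

def top_k(v, k):
    """Top-k compressor: keep the k largest-magnitude entries of v, zero the rest."""
    out = np.zeros_like(v)
    idx = np.argpartition(np.abs(v), -k)[-k:]
    out[idx] = v[idx]
    return out

def ef21(grads, x0, step, k, iters, eta=1.0):
    """EF21-style loop, simulated in one process.

    grads : list of per-worker gradient functions (hypothetical interface)
    eta   : momentum parameter; eta=1.0 means no momentum
    """
    n = len(grads)
    x = x0.copy()
    v = [grad(x) for grad in grads]        # per-worker momentum buffers
    g_local = [top_k(vi, k) for vi in v]   # per-worker gradient estimates
    g = sum(g_local) / n                   # server-side aggregate
    for _ in range(iters):
        x = x - step * g                   # server step, broadcast to workers
        for i, grad in enumerate(grads):
            v[i] = (1.0 - eta) * v[i] + eta * grad(x)
            msg = top_k(v[i] - g_local[i], k)  # the only vector a worker sends
            g_local[i] = g_local[i] + msg
        g = sum(g_local) / n               # server adds the averaged messages
    return x

if __name__ == "__main__":
    # Toy problem (an assumption for illustration): f_i(x) = 0.5 * ||x - b_i||^2,
    # whose average is minimized at the mean of the b_i.
    rng = np.random.default_rng(0)
    B = rng.normal(size=(4, 10))
    grads = [lambda x, b=b: x - b for b in B]
    x = ef21(grads, np.zeros(10), step=0.05, k=2, iters=1000)
    print("distance to optimum:", np.linalg.norm(x - B.mean(axis=0)))
```

In this sketch each worker sends only `k` of the 10 coordinates per round, yet the estimates `g_local[i]` keep tracking the true local gradients because the compression error is carried forward rather than discarded.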

Impact: The EF21 line of work is now a foundation for communication-efficient federated learning systems.

Selected Publications

- EF21: A New, Simpler, Theoretically Better, and Practically Faster Error Feedback. with P. Richtárik, I. Sokolov. NeurIPS (Oral), 2021.

- EF21 with Bells & Whistles: Six Algorithmic Extensions of Modern Error Feedback. with I. Sokolov, E. Gorbunov, Z. Li, P. Richtárik. Journal of Machine Learning Research, 2025.

- Momentum Provably Improves Error Feedback! with A. Tyurin, P. Richtárik. NeurIPS, 2023.

- Safe-EF: Error Feedback for Nonsmooth Constrained Optimization. with R. Islamov, Y. As. ICML, 2025.

Research Impact

The EF21 algorithm and its extensions have become fundamental building blocks for communication-efficient distributed machine learning, enabling large-scale training while maintaining theoretical guarantees and practical performance.

Figure 1. Distributed safe reinforcement learning of humanoid agents across multi-node clusters that use compressed updates.

Figure 2. EF21-SGD diverges under simple stochastic noise when using aggressive compression, whereas the momentum-enhanced EF21-SGDM stays stable near the optimum and remains efficient on more challenging tasks.

Figure 3. Safe-EF convergence across worker counts: adding workers reduces communication cost, with diminishing returns, highlighting the method's scalability.