Data Efficiency: Robust Algorithms for Challenging Statistical Conditions

The Challenge: Sample efficiency and training stability are major bottlenecks in reinforcement learning, particularly under heavy-tailed noise, non-stationarity, and limited data, all of which are common in real-world applications.

My Approach: I investigate how statistical properties of data influence training dynamics, with emphasis on developing robust algorithms that maintain performance under challenging conditions while remaining tuning-free.

Key Contributions:

- Optimal sample efficiency guarantees for SGD under infinite variance noise (NeurIPS OptML Workshop 2025, Oral). An extended version is under review at a journal.

- Normalized SGD methods with high-probability fast convergence under heavy-tailed noise (AISTATS 2025).

- Breaking sample efficiency limits for stochastic policy gradient methods, with improved theory and strong continuous-control results (ICML 2023a; ICML 2023b).

- Hessian clipping: optimal second-order optimization under heavy-tailed noise (NeurIPS 2025).

- Understanding the fundamental performance gap between tuning-free SGD and modern adaptive methods (NeurIPS 2023).
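To make the clipping and normalization ideas in the list above concrete, here is a minimal sketch of the two update rules on a toy quadratic with infinite-variance gradient noise. It is an illustration under stated assumptions, not the algorithms from the cited papers: the function names (`clipped_step`, `normalized_step`, `run`), the objective, the Student-t noise model, and all parameter values are hypothetical choices made for this example.

```python
import numpy as np


def clipped_step(x, grad, lr, threshold):
    """One SGD step with gradient clipping.

    The gradient is rescaled only when its norm exceeds `threshold`
    (a hypothetical tuning parameter for this sketch).
    """
    norm = np.linalg.norm(grad)
    scale = min(1.0, threshold / norm) if norm > 0 else 1.0
    return x - lr * scale * grad


def normalized_step(x, grad, lr, eps=1e-12):
    """One normalized SGD step.

    The iterate moves a distance of at most `lr` along the negative
    gradient direction, so a single outlier cannot cause a large jump.
    """
    return x - lr * grad / (np.linalg.norm(grad) + eps)


def run(step, steps=2000, seed=0, **kwargs):
    """Minimize f(x) = 0.5 * ||x||^2 from x = (1, ..., 1) with noisy gradients.

    Assumption for illustration: the noise is Student-t with 1.5 degrees
    of freedom, which has a finite mean but infinite variance.
    """
    rng = np.random.default_rng(seed)
    x = np.ones(10)
    for _ in range(steps):
        noise = rng.standard_t(df=1.5, size=x.shape)
        stochastic_grad = x + noise  # true gradient of f is x
        x = step(x, stochastic_grad, **kwargs)
    return float(np.linalg.norm(x))


if __name__ == "__main__":
    print("clipped:   ", run(clipped_step, lr=0.01, threshold=1.0))
    print("normalized:", run(normalized_step, lr=0.01))
```

Both rules bound the length of each update, which is what keeps a single heavy-tailed sample from destabilizing training. Clipping needs a threshold in addition to the step size, whereas normalization has only the step size to set.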

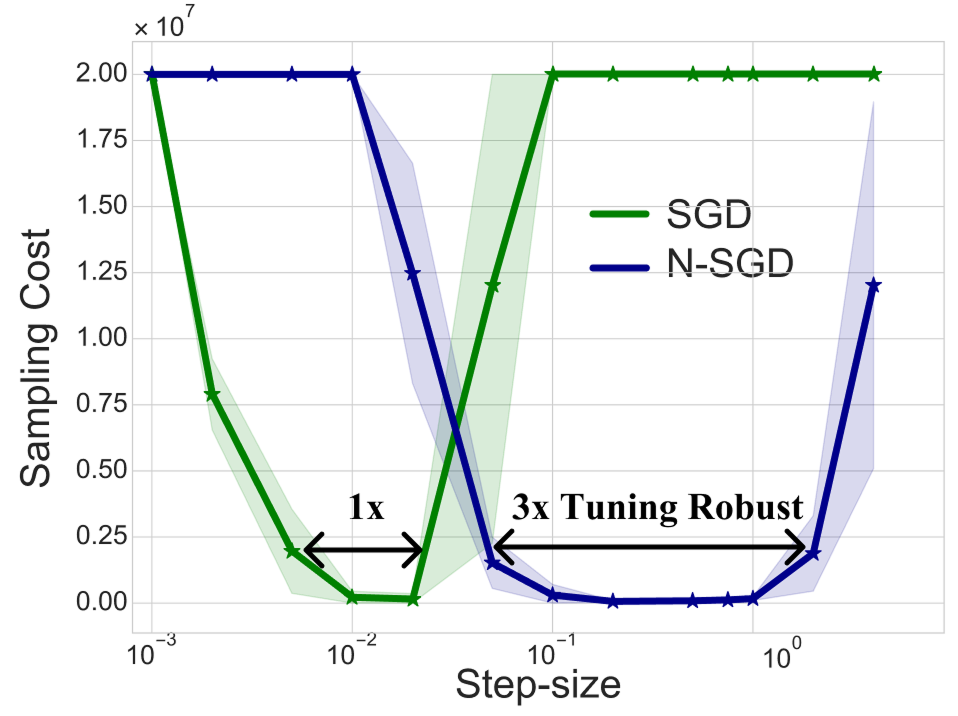

Impact: In practice, our proposed methods widen the range of stable step sizes several-fold compared to standard SGD on challenging benchmarks such as the Humanoid task, demonstrating both theoretical rigor and practical effectiveness.

Humanoid agent training.

Selected Publications

- Can SGD Handle Heavy-Tailed Noise? with F. Hübler, G. Lan. NeurIPS OptML Workshop, 2025 (Oral).

- From Gradient Clipping to Normalization for Heavy-Tailed SGD. with F. Hübler, N. He. AISTATS, 2025.

- Stochastic Policy Gradient Methods: Improved Sample Complexity for Fisher-non-degenerate Policies. with A. Barakat, A. Kireeva, N. He. ICML, 2023a.

- Reinforcement Learning with General Utilities: Simpler Variance Reduction and Large State-Action Space. with A. Barakat, N. He. ICML, 2023b.

- Second-order Optimization under Heavy-Tailed Noise: Hessian Clipping and Sample Complexity Limits. with A. Sadiev, P. Richtárik. NeurIPS, 2025.

- Two Sides of One Coin: the Limits of Untuned SGD and the Power of Adaptive Methods. with J. Yang, X. Li, N. He. NeurIPS, 2023.

Research Impact

These robust algorithms significantly improve training stability and sample efficiency, making reinforcement learning more practical for real-world applications with challenging statistical conditions.